I’ve written a lot of stuff over the last few years about information architecture. And I’m working on writing more. But recently I’ve realized there are some things I’ve not actually posted publicly in a straightforward, condensed manner. (And yes, the post below is, for me, condensed.)

WTF is IA?

1. Information architecture is not just about organizing content.

- In practice, it has never been limited to merely putting content into categories, even though some very old definitions are still floating around the web that define it as such. (And some long-time practitioners are still explaining it this way, even though their actual work goes beyond those bounds.)

- Every competent information architecture practitioner I’ve ever known has designed to help people make decisions, to persuade customers, or to encourage sharing and conversation where relevant. There’s no need to coin new terms like “decision architecture” and “persuasion architecture.”

- This is not to diminish the importance and complexity of designing how content is stored and accessed, which is actually pretty damn hard to do well.

2. IA determines the frameworks, pathways and contexts that people (and information) are able to traverse and inhabit in digitally enabled spaces.

- Saying information architecture is limited to how people interact with information is like saying traditional architecture is limited to how people interact with wood, stone, concrete and plastic.

- That is: Information architecture uses information as its raw material the same way building architecture uses physical materials.

- All of this stuff is essentially made of language, which makes semantic structure centrally important to its design.

- In cyberspace, where people can go and where information can go are essentially the same thing; likewise, where and how people can access information and where and how they can access one another are, again, essentially the same thing. To ignore this is to do IA all wrong.

3. The rise of things like ubiquitous computing, augmented reality, emergent/collective organization and “beyond-the-browser” experiences makes information architecture even more relevant, not less.

- The physical world is increasingly on the grid, networked, and online. The distinction between digital and “real” is officially meaningless. This only makes IA more necessary. The digital layer is made of language, and that language shapes our experience of the physical.

- The more information contexts and pathways are distributed, fragmented, user-generated and decentralized, the more essential it is to design helpful, evolving frameworks, and conditional/responsive semantic structures that enable people to communicate, share, store, retrieve and find “information” (aka not just “content” but services, places, conversations, people and more).

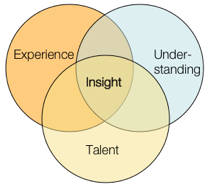

- Interaction design is essential to all of this, as are graphic design, content strategy and the rest. But those things require useful, relevant contexts and connections, semantic scaffolding and … architecture! … to ensure their success. (And vice versa.)

Why does this need to be explained? Why isn’t this clearer? Several reasons:

1. IA as described above is still pretty new, highly interstitial, and very complex; its materials are invisible, and its effects are, almost by definition, backstage where nobody notices them (until they suck). We’re still learning how to talk about it. (We need more patience with this: if artists, judges, philosophers and even traditional architects can still disagree among themselves about the nature of their fields, there’s no shame in IA following suit.)

2. Information architecture is a phrase claimed by several different camps of people, from Wurmanites (who see it as a sort of hybrid information-design-meets-philosophy-of-life) to the polar-bear-book-is-all-I-need folks, to the information-technology systems architects and others … all of whom would do better to start understanding themselves as points on a spectrum rather than mutually exclusive identities.

3. There are too many legacy definitions of IA hanging around that need to be updated past the “web 1.0” mentality of circa 2000. The official explanations need to catch up with the frontiers the practice has been working in for years now. (I had an opportunity to fix this with the IA Institute and dropped the ball; I’m glad to help the new board and others in any way I can, though.)

4. Leaders in the community have the responsibility to push the practice’s understanding of itself forward: in any field, most members will follow such a lead but will otherwise remain in stasis. We need to be better boosters of IA, calling it what it is rather than dodging the challenge of “defining the damn thing.”

5. Some leaders (and/or loud voices) in the broader design community have, for whatever reason, decided to reject information architecture or, worse, continue stoking some kind of grudge against IA and people who identify as information architects. They need to get over their drama, occasionally give people the benefit of the freakin’ doubt, and move on.

Update:

This has generated a lot of excellent conversation, thanks!

A couple of things to add:

After some prodding on Twitter, I managed to boil down a single-statement explanation of what information architecture is, and a few folks said they liked it, so I’m tacking it on here at the bottom: “IA determines what the information should be, where you and it can go, and why.” Of course, the real juice is in the wide-ranging implications of that statement.

Also Jorge Arango was awesome enough to translate it into Spanish. Thanks, Jorge!